Talking Us To Death: How AI Could Lead To Humanity’s Most Conceivably Boring End

Originally Published July 25, 2023

I recently watched Tom Cruise run real fast for the seventh time.

Actually, he runs in more than just Mission:Impossible movies, so let's call it the twentieth time. I’ve seen a lot of Tom Cruise movies, and I am unashamed.

MI:Dead Reckoning (Part 1) was both excellent and (repeatedly) unintentionally hilarious.

Definitely see it with someone you are obligated to hang out with but can’t stand talking to for more than a few minutes. This way, you can meet at the theater, say hello, then sit in silence for two and a half hours before you say you are sorry, but you can’t get a drink afterward because you need to go home and wash your hair.

I’m sure you have heard by now, or maybe not if you aren’t as culturally tapped in via a straight content drip to the arteries as I am, but the villain of the most recent installment of Tom Cruise Running is quite traditional, even though I’ve seen this particular evildoer described as non-traditional in press coverage.

No spoilers, of course, but the foil is a well-trod cinematic trope who (insert Phil Hartman / Troy McClure voice here) “you might remember from such films as” The Terminator, 2001: A Space Odyssey, The Matrix, Ex Machina, Blade Runner, TRON, WarGames, and Ghost In The Shell, or TV shows like Westworld, Devs, Star Trek, and Mrs. Davis.

*** COUGH COUGH * THE ANTAGONIST IS A MURDEROUS ROGUE AI * COUGH COUGH ***

Sorry for spoiling the first three minutes of the movie for you if you haven’t graced the cinema in the past week or so, but it got me thinking about why mass death via Homicidal Autonomous Artificial General Intelligence is so compelling.

👺 I (and we all) shall now refer to Homicidal Autonomous Artificial General Intelligence as “HAAGI” because it sounds like either a limited edition Swedish Meatball from IKEA, or a particle board nightstand, also from IKEA.

Free market capitalism and the entertainment it produces clearly find the idea of HAAGI as the dastardly (strokes mustache) foe in visual media to be compelling because it makes money. Which is ironic considering the free market is definitely going to be the first thing to go once the robots get all stabby stabby shooty shooty. And we the audience in turn find HAAGI compelling because it feels so fantastical and decidedly un-IRL, at least until recently.

But what if it’s not HAAGI that gets us in the end? (The killer robots, not the potential IKEA products). What if, despite all hand-wringing and cloth-rending by techno-philosophers, politicians, and un-ironically by the very people building the products that may lead to an actual HAAGI scenario, there is something we are missing? What if we have all been mind-poisoned by our collective cinematic experience into overlooking something that should be more obvious, but is much less sinister? What if it’s not HAAGI that spells our doom, but instead, the thing that does us fleshy humans in, is the thing we all hate more than anything else in the world:

Email.

While you nod affirmatively at our mutual hatred of email, let's crunch some numbers in a feature called ‘Bad Math.’ (Insert future ‘Bad Math’ theme song here).

I should note that I am quite awful at math generally. I have not taken a class focused on numbers since my junior year of high school and I’m getting so old that we used an abacus. Fortunately for me, the Internet is full of stupid numbers for me to manipulate in irrational ways for your entertainment as truly outrageous hypothetical scenarios that almost certainly won’t (maybe won’t) ever occur.

Consider the following:

✉️ In 2022, there were around 330 billion emails sent per day. It increases about 1% every year. (Statista)

⚠️ In 2022, 49% of emails were categorized as spam. (Statista)

🌏 In 2019, it was estimated by Mike Berners-Lee (brother of Tim Berners-Lee, the inventor of the World Wide Web, no less) that email accounts for about 150m tonnes CO2e, or about 0.3% of the world’s total carbon footprint. (Carbon Literacy)

🔌 In 2018, our beloved email sending devices (personal electronics) used around 1-2% of the global electricity supply.

In 2020, it was around 4–6%.

By 2030, it is estimated to be around 8-21%. (University of Pennsylvania)

🤖 It is estimated that OpenAI’s GPT-4 Large Language Model (“LLM”) used about 7.5 megawatt-hours (MWh) of energy for its training, the same amount as the combined yearly energy consumption of approximately 700 U.S. households.

It is additionally estimated that running GPT-4 models would require about 8 MWh of energy per year, or around 750 U.S. households. (Nature Communications)

📞 As of 2023, there were approximately 2.88 million persons holding customer service representative jobs in the United States. (DataUSA)

If you can’t see where I am going with this, you either haven’t had your coffee yet, don’t know me well enough, or you’re too busy prompting GPT-4 to write that difficult email to your colleagues about not microwaving salmon in the office kitchen, which is GPT-4’s best use case as far as I’m aware.

Put the numbers aside momentarily and let’s assume the following will happen, or is already happening:

- Email spammers will use LLMs to send more spam email. #alreadyhappening

- Legitimate companies will use LLMs to send more (and increasingly less legitimate) marketing emails. #alreadyhappening

- More and larger LLMs will be trained by more and larger AI companies. #alreadyhappening

- Society will continue to use increasingly massive amounts of energy. #alreadyhappening

- Most customer support roles will be replaced by AI. #alreadyhappening

Okay, so, I lied. None of that is actually an assumption because it’s all already happening.

Let’s also assume Moore’s Law is in effect. Named after Intel co-founder Gordon Moore, the short version is that chip technology doubles in computing power every two-ish years. He came up with this in 1965, and so far he has not only been correct, but we have recently entered an era of almost exponential increase in that doubling of power when it comes to training artificial intelligence.

People like to apply Moore’s Law to all sorts of things in technology to sound more intelligent than they actually are, almost exactly like I am doing here.

So, assuming all of the above, and also assuming Moore’s Law is applied to those variables, by way of utterly insane extrapolation of the initial values, the end result is not a HAAGI scenario, but one in which we have so many AI chatbots gabbing at each other in truly endless ouroboros conversations that we quite literally would use up all the world’s energy and computing power within a decade, essentially because “AI-be-talking”.

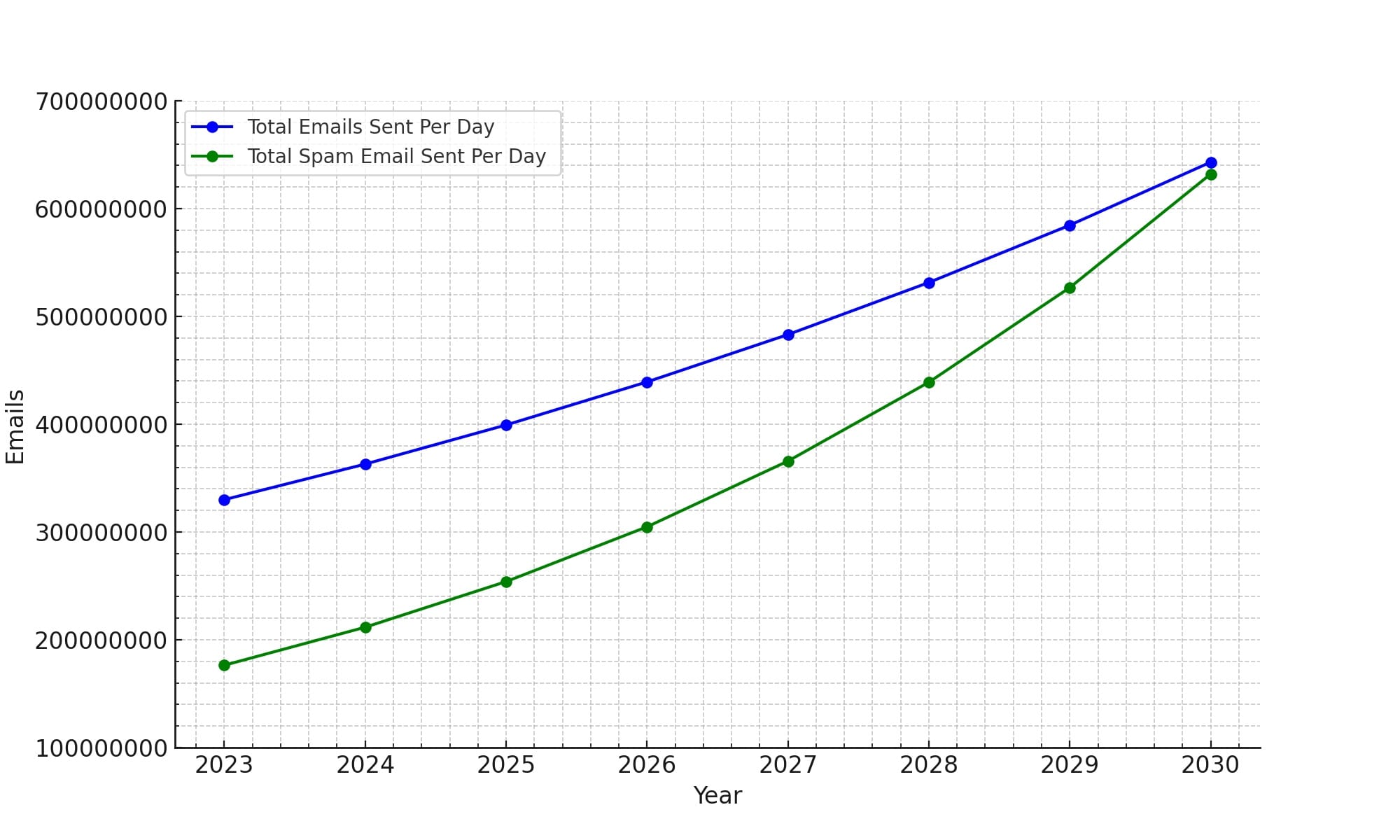

Let’s look at some exceptionally Bad Math charts to visualize this.

🥸 Note once again that I am not a researcher or knighted academic. That said, I did take real world numbers (see above) from some light research done in the wee hours after my infant daughter falls asleep, and used those numbers as a basis. I then extrapolated moderate to wildly exponential and completely made up increases YOY through 2030. If you are a real researcher, well, bully for you, someone should pay you to do this as a true peer reviewed study you can publish somewhere and get tenure so people can throw inkwell pens at you in the cafeteria for your tremendous achievement.

Total Amount of Email and Spam Sent Per Day

📈 In our maybe not hypothetical scenario where AI is just sending way more mail, which it already is, if we increase the volume of email by just 10% YOY per day, and also increase the volume of spam by 20% YOY per day, because if nothing else is certain it will go up by some amount, then the number of spam emails will be roughly equivalent to the number of total emails sent per day by 2030, with 640 billion emails and spam, each, per day, for a combined total of 1.28 trillion sent each day, and 467.2 trillion combined emails and spam sent annually.

(Darth Vader Voice) I can feel your hate (for email) growing!

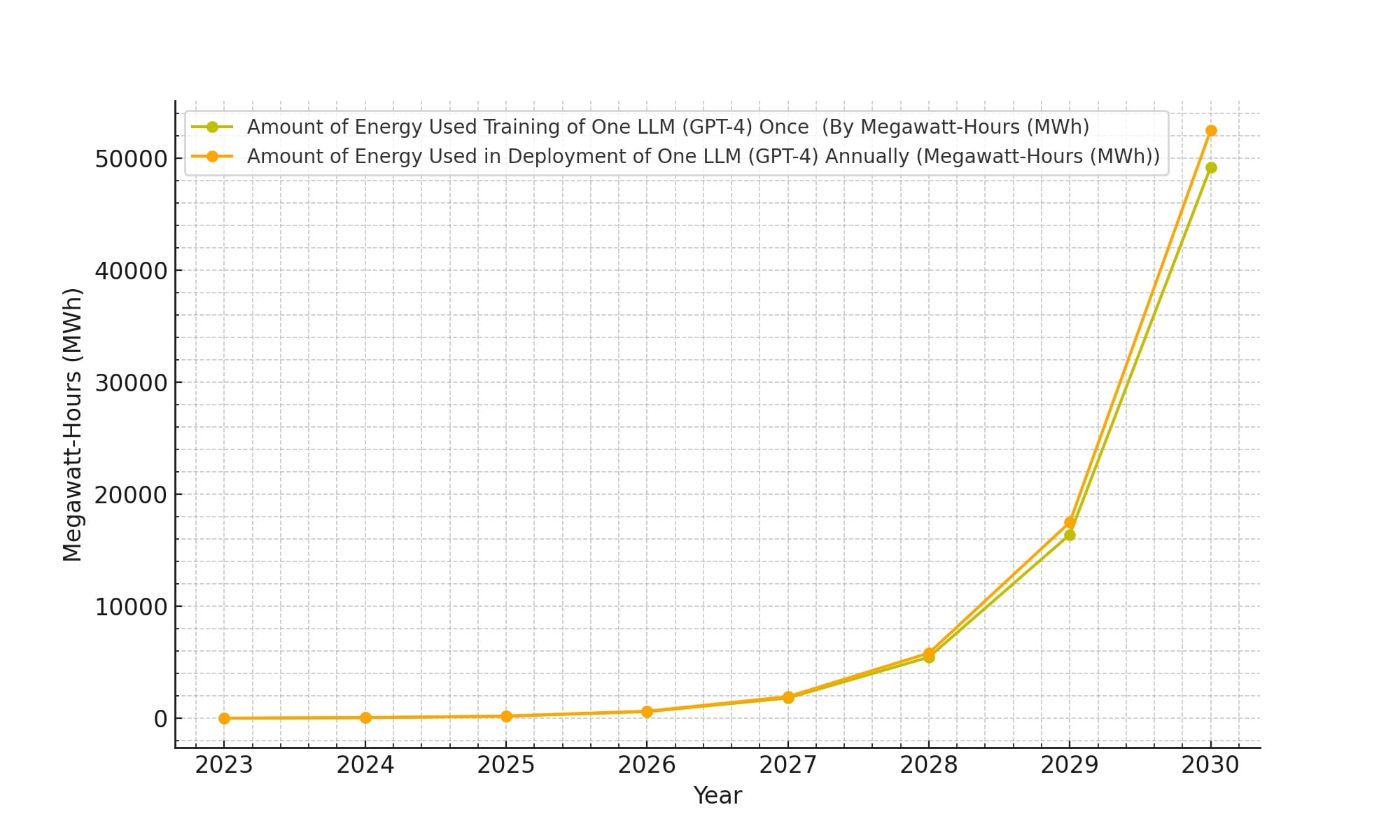

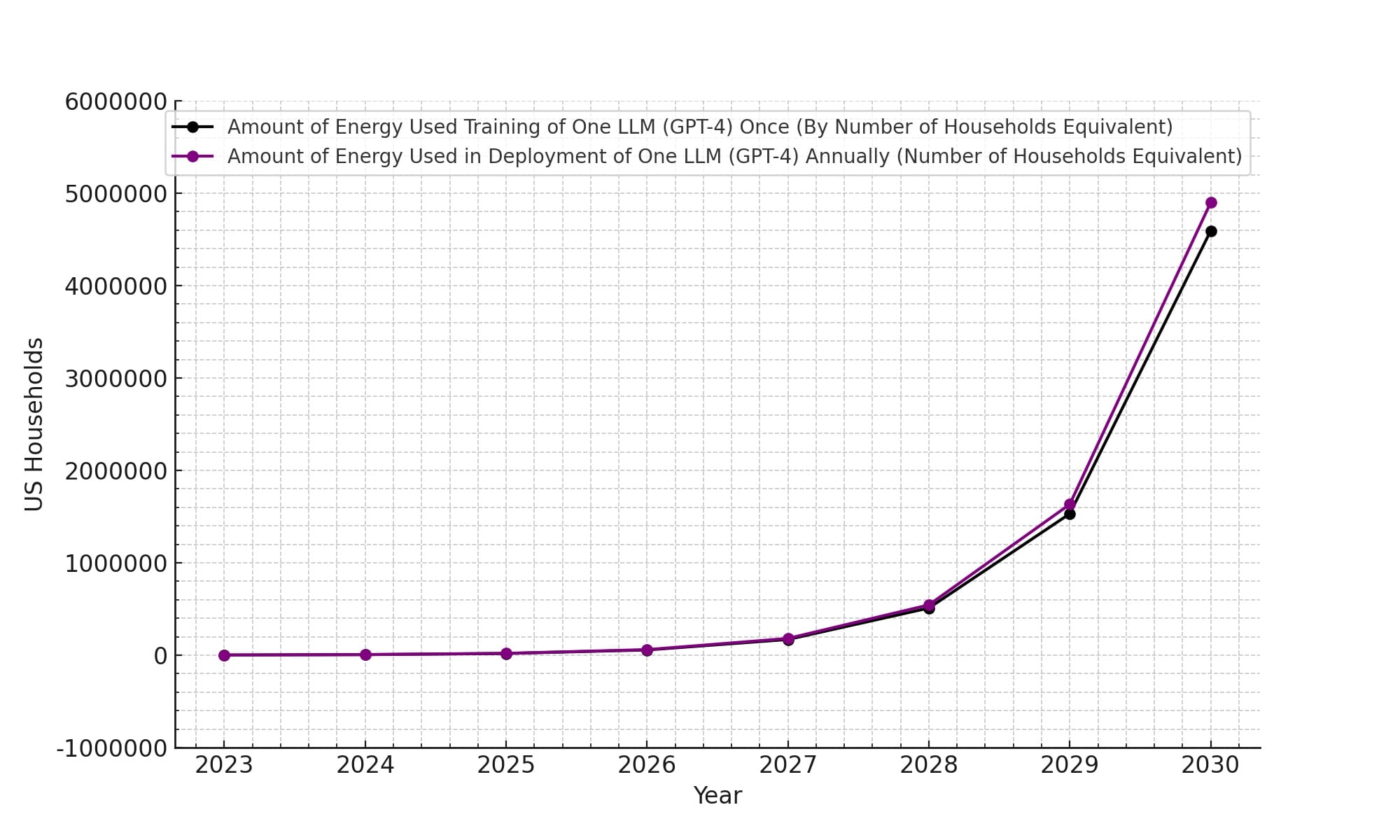
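For anyone who wants to redo the Bad Math at home, here is a minimal Python sketch of the email compounding described above. The function name `project` and the seven-year window are assumptions made for illustration, not anything taken from the charts; the starting values are the 2022 figures listed earlier. Under those assumptions, total email comes out a bit over 640 billion per day by 2030, with spam in the same ballpark.

```python
def project(start: float, yearly_growth: float, years: int) -> float:
    """Compound a starting value by a fixed yearly growth rate."""
    return start * (1 + yearly_growth) ** years


EMAILS_PER_DAY_2022 = 330e9  # emails per day, from the 2022 figure above
SPAM_SHARE_2022 = 0.49       # share of email that was spam, from the 2022 figure above
YEARS = 7                    # assumption: seven compounding steps to reach 2030

# Total email grows 10% per year; spam grows 20% per year (the essay's made-up rates).
emails_2030 = project(EMAILS_PER_DAY_2022, 0.10, YEARS)
spam_2030 = project(EMAILS_PER_DAY_2022 * SPAM_SHARE_2022, 0.20, YEARS)

print(f"Emails per day in 2030: {emails_2030 / 1e9:.0f} billion")
print(f"Spam per day in 2030:   {spam_2030 / 1e9:.0f} billion")
```

Change `YEARS` or the growth rates to taste. The numbers are made up anyway, so nobody can stop you.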

Annual Training and Deployment of LLMs by MWh and U.S. Household Energy Equivalent

LLMs do not train and run themselves as we saw in the initial numbers above, so let’s assume both the requirements for training and deploying ONE model like GPT-4 will increase in power by 2x each year, slightly faster than Moore’s Law because, at least right now, it seems to be accelerating faster than 2x every 2 years for AI.

📈 By 2030, we reach a total MWh equivalent of nearly 5 million U.S. households for training each comparable model ONCE, and then another 5 million households of MWh equivalence for running them annually. Again, this would be for ONE LLM of the size and scope of GPT-4, like Anthropic’s Claude, Meta’s LLaMA, or Google’s Bard, amongst many many others, and there will be many many many others.

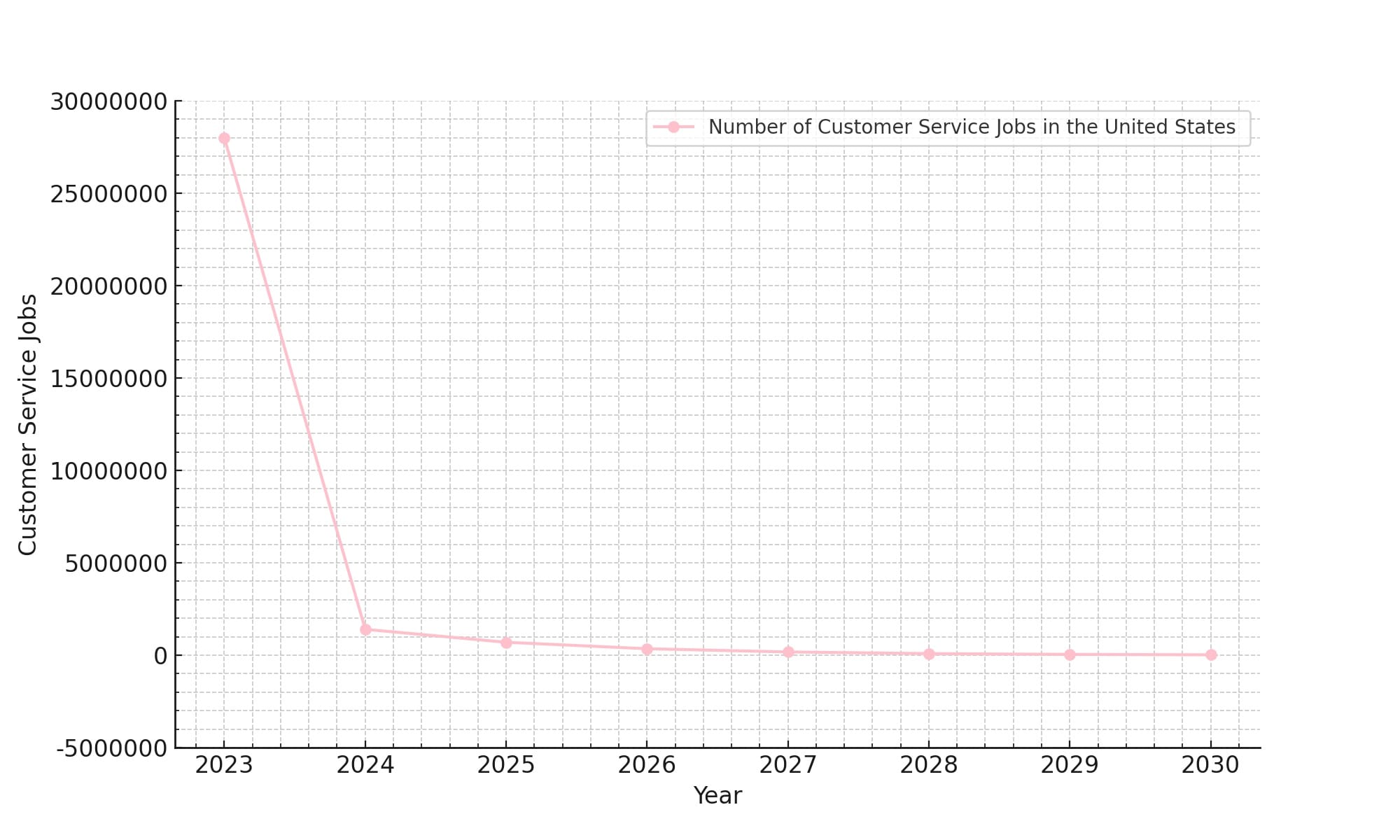

Number of Customer Service Jobs in the U.S.

📉 Finally, assuming a precipitous decrease of real humans doing customer service work, such as replying to emails, chats, or phone calls, due to the correlated increase in LLMs and their adoption at scale, there will be near zero humans doing any sort of written or spoken customer service work not in 2030, but in the year 2024, if we decrease the number by half every year. In 2030 there will be .02 humans still doing customer service. And I don’t mean 2% of the current amount, I mean .02 of one (1) single unit of human.

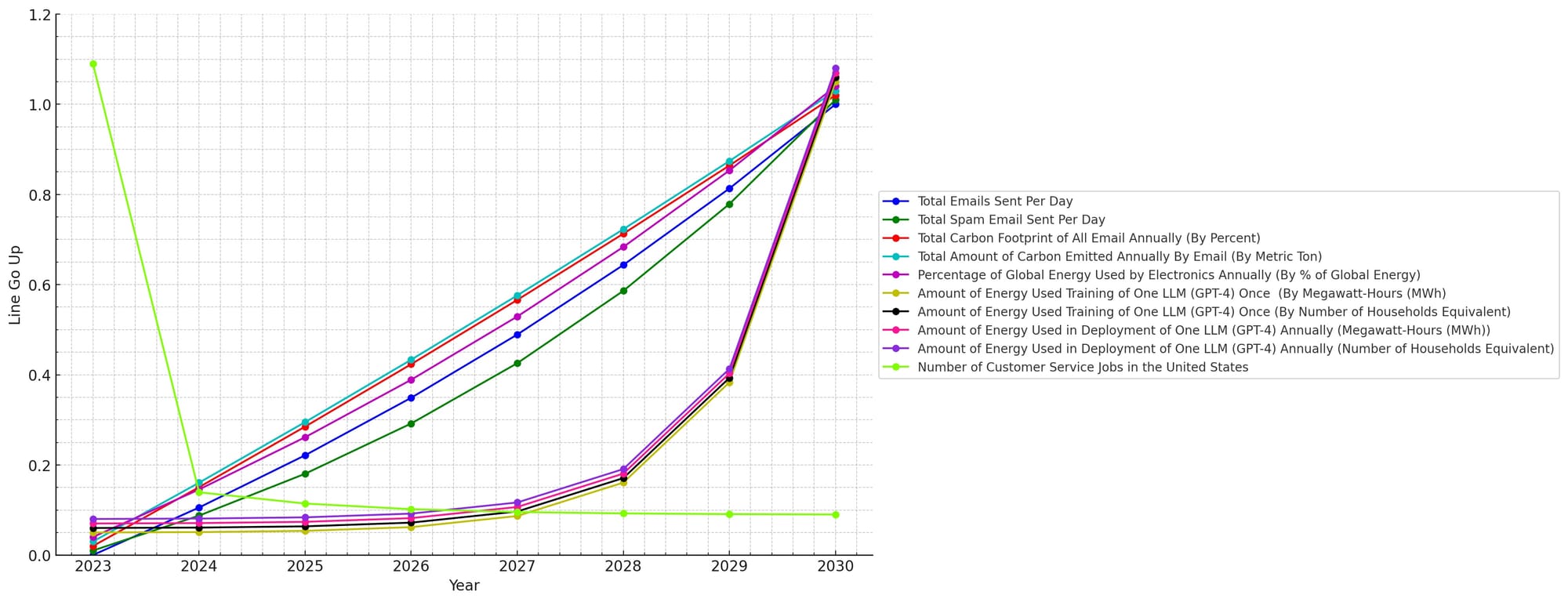

Overlaid Mega Chart of Humankind’s Doom

For funsies, here are all of the charts above overlaid, plus some additional data charted from extrapolations of similar types of data from the original real basis data (further above) to make the chart look extra silly.

While the HAAGI scenario from sci-fi may yet happen (lots of editorials/interviews/strongly-worded-letters by the people building these AI systems seem to think so), and we may soon all be forced into a human centipede chain that encircles the world while shining chrome jackboots, the scenario of “Death by Email” actually seems much more likely, at least in the near term.

It is easy to imagine the following:

- Person A loses their job at a call center as a customer service representative for the ACME Widget (Vibrator) Factory and is replaced by AI (“Robot 1”).

- Robot 1 is tireless. Robot 1 works 7 days a week and twice on Sundays.

- Robot 2 is an AI chatbot used by the assorted customers of the ACME Widget/Vibrator Factory to try and get refunds for their malfunctioning “widgets”.

- Robot 1 has been programmed to never issue refunds.

- Robot 2 has been programmed to not give up until a refund is issued.

- Robot 1 and Robot 2 engage in a polite email exchange for the rest of time debating the merits of widget-vibrators on human sexuality and whether or not refunds are appropriate if the product is outside of the 30 day warranty window and has been immersed in, uh, “fluids”.

- This happens at the scale of the entire world. In every customer service department. And every single spam operation. And every single inbox.

It is wholly possible, if not probable, that we will quite literally run out of both energy and computing power/storage because of rampant AIs just trying to get things from each other via email in their capacity as customer service slaves, and/or trying to steal your credit card information via phishing once we all have our own personal email chatbots to respond to our incomings.

Is this all ridiculous? Of course it is, but is it any more ridiculous than your door being kicked in by a sentient HAAGI robot coming to kill you for your cavity fillings so that it can make more paperclips?

If email and spam both increase exponentially because of large language models (they are);

And if there are more and more LLMs trained and deployed (they are);

And the energy and compute consumed by both of those things increases exponentially (it is);

Then our species will expire not via high-powered lasers shot from the eyes of a HAAGI imposter-bot of your grandma who tricks you into disintegration with the promise of those chocolate chip cookies you love, which the HAAGI learned by reading your Facebook posts from 2009 which were a part of its training data.

Instead, we will burn every energy source available in colossal data centers just to keep our perfectly benign AI chatbots running, serviceable, and happily chatting with each other, while all of the former customer service representatives of the world engage in mortal combat with rusty spoons (S/O Salad Fingers) for the last slice of ham from the Subway across the street from their old call center.

Probable? Maybe. Possible? Definitely maybe.

However fun the science fiction versions of HAAGI are to watch and read about, I fear our ultimate demise will be much like the rest of human life outside of the science fiction of our entertainment: quite fucking boring.

And it’s usually the boring stuff that’s actually worth thinking about.

wtflolwhy :: July 25, 2023