All Your Face Are Belong to Us: Welcome to the Age of Non-Consent, Where Your Personal Biometric Autonomy Can, and Will, Be Violated to Create Non-Consensual Pornography of You, at Will, in Seconds

Originally Published November 15, 2023

Fear of the unknown is powerful. It could be argued that fear of the unknown is the root cause of most, or all, human suffering, depending on your level of cynicism. People fear what they don’t understand and consequently resist what they don’t understand because they fear it. An Ouroboros tied with a Gordian Knot. Such apprehension of often undefined matters, which may or may not ever actually occur, is felt most acutely during leaps in technological innovation. I’ve previously written on literature, film, and TV’s fascination with writing Homicidal Autonomous Artificial General Intelligence as antagonists, a trope that shows no signs of going inert. The Terminator is a compelling and thought-provoking film, and Boston Dynamics makes adorable, articulately limbed robots that dance to pop music and may one day become Terminators themselves, but neither scenario, however likely, captures the reality of, well, reality.

That which we are experiencing right NOW lacks context because we haven’t had time to contextualize it yet. It’s still happening. With paradigmatic technologies, it can take years, sometimes decades, before we fully understand an innovation’s true ramifications for society and for our humanity. But at this particular moment in history, we are experiencing something different, something that requires us, immediately and like never before, to contextualize what is happening at the intersection of your personal biometric autonomy, the very image and embodiment of all things ‘you’, and generative explicit pornography made possible by products and services that were effectively unavailable to the public at scale until less than a year ago.

NOTE: For those of you now wondering if I’ve suddenly become a celibate conservative prude, rest easy: I have not. Sex is extremely healthy. Porn is fine (in moderation, you sickos). “Some of my best friends are porn!”

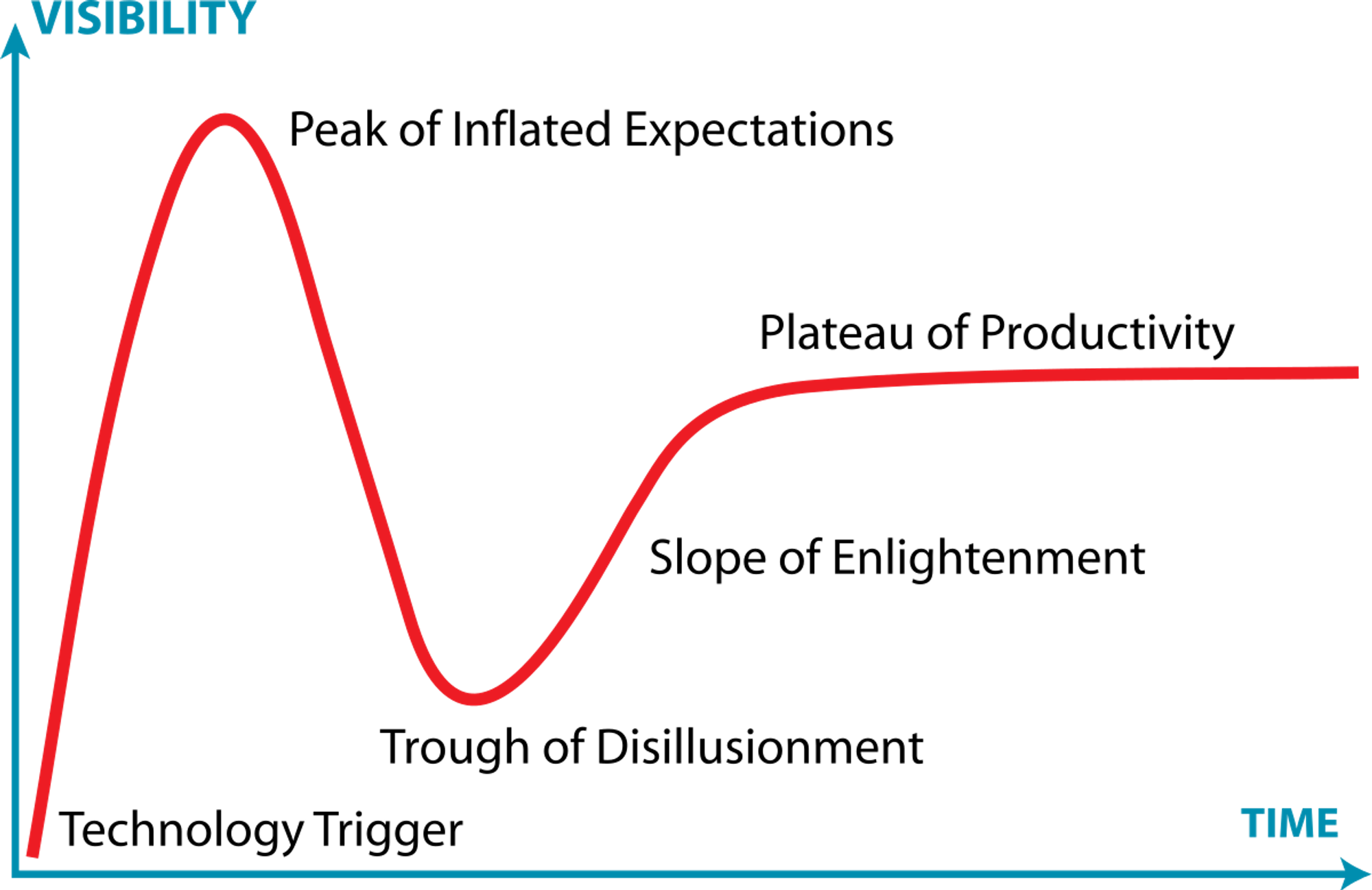

For those who haven’t spent over a decade around Silicon Valley venture capitalists micro-dosing ayahuasca and publishing techno-utopian manifestos, the Gartner Hype Cycle is a chart that shows how new technology is adopted. You can see it below, courtesy of quaint ol’ Wikipedia.

My TL;DR Version of the Gartner Hype Cycle:

Something new and exciting drops, people YOLO into it, then they go “meh” but other people go “eh”, the “eh” people slowly make “meh” people into “eh” people, and then everyone says “eh” and then one day in 2014 you own an iPhone that suddenly has a really bad U2 album on it that you didn’t buy and that you can’t delete.

The Gartner Hype Cycle is an important frame for what is happening now, because we are, by any and all accounts, at the Peak of Inflated Expectations with generative AI, and we have quite unarguably reached that point faster than with any previously deployed technology, because anyone, anywhere, with a web browser can access it. Just last week, OpenAI reported that 92% of all Fortune 500 companies now use ChatGPT. It came out less than a year ago.

Our collective experience with the imminent Trough of Disillusionment won’t come because Claude, Bard, Perplexity, ChatGPT, or any other chatbot gives some lawyer fake cases to cite in court. It won’t come because you prompted Midjourney to create a birthday card for your boss that has them riding a dragon into the office, and it won’t come because you used Adobe Firefly to edit your ex out of that one photo you like better without them in it. It’s going to arrive, and indeed has already arrived, as one of the new Four Horsemen of the digital apocalypse: deepfake pornography of real people with little or no connection to the creator of the content.

Now, you may be thinking that you’ve heard of deepfakes, that you’ve seen those funny videos of some celebrity, politician, or other public figure doing something out of character, or that photo of the Pope wearing that dope jacket, and that this is not really something YOU need to worry about. You’re not a celebrity, politician, or public figure, so why would this sort of thing concern you? We’ve been warned about this stuff for years and nothing really bad has happened. Right?

At least that’s what the men reading this are thinking.

If you’re a woman, you are probably attempting to scrub the entire internet of any photos or videos of yourself, on every platform you still have access to, before you can even finish this sentence.

Hyper-realistic digital forgeries have been possible for some time, but until the past few months, they were almost exclusively the domain of major Hollywood movie studios, special effects companies, and others with lots of processing power, lots of expertise, and even more time on their hands, who mostly used them to make old actors forever young on the silver screen. Disney de-aged Mark Hamill by 40 years on The Mandalorian, had him fight evil droids, and made it look pretty cool. Netflix made Robert De Niro look (kind of, but not really) younger for The Irishman, and made it look pretty bad. Michelle Pfeiffer was de-aged in one or all of the Ant-Man movies, to an effect I can’t comment on because I haven’t seen those movies, or at least can’t remember seeing them.

But now, anyone, anywhere, with free or nearly free software and websites can take your face and put it on someone else’s body in whatever videos, photos, or invented generative images they can think of and access.

All they need is your Instagram. Or Facebook. Or TikTok. Or LinkedIn. Or YouTube. Or Tumblr. Or Reddit. Or Google. Or Tinder. Or Bumble. Or Grindr. Or OnlyFans. Or Patreon. You get the picture. Or rather, everyone gets the picture (or video) of you.

Privacy has been dead for a while, but our biometric personal autonomy has remained relatively intact. It was hard to take the face of a woman you saw on a dating app who didn’t match with you and put it on the body of an adult industry performer, well, performing. It was hard to zoom in on a group photo from that one birthday party last year, crop out the face of a woman you find attractive, and put it on the body of a tentacle alien banging a goblin in the likeness of your eleventh-grade English teacher. Now, it’s not hard at all. In fact, you can do it right now with no technical expertise whatsoever. And so can anyone else.

NOTE: The tentacle alien / goblin scenario is real, as are other equally outlandish and disturbingly dark scenarios that the human psyche has been pouring into private Discords. I did not make that up. No, I am not sharing the link.

Deepfake porn is growing at an exponential rate. The internet was built on the backs (no pun intended) of adult industry performers and sex workers, who quietly earned untold billions of dollars for the most trafficked websites on the internet while being publicly demonized and shamed for doing their jobs by the same people who consumed their work. And now that shame will come for us all, and tragically, mostly for those who identify as women, without their knowledge and without their consent. Women who never performed in an adult movie, or had a leaked sex tape, or flashed that one time in college during Mardi Gras at the wrong time next to a big black bus with ‘Gone Wild’ or something written on it. Girlfriends, wives, sisters, mothers, cousins, aunts, grandmothers. Anyone who has their face, or even their fully clothed body, anywhere on the internet is vulnerable to the psychological trauma, reputational damage, and invasion of personal autonomy.

We aren’t just entering a period of digital exploitation that can affect every woman who has ever put herself on the internet; we have already entered one. How does one even define consent in an era where most people have freely handed over their likeness to platforms whose terms of service claim sweeping rights over those likenesses? How is it possible to prevent random creepy men (and I’m sorry, but most of the offenders here will be creepy men, so sue me, fellow men) from taking the content of any woman they deem attractive and using one of the many, and I mean MANY, hundreds if not thousands of tools now at their disposal to create pornographic, violent, or otherwise insidious content of women they just happen across on the internet? It’s not even a question of whether some guy is taking a photo of a girl in his college class, whom he has never even spoken to, and generating an explicit image of her for his personal use, sharing it with his group chat, or posting it publicly literally anywhere on the internet that will accept that type of content (which is almost anywhere you can think of and, more likely, millions of places you cannot and never will). All of it is already happening.

This is not to mention that the content used to create these non-consensual generative works of real people often uses other real people’s work without their consent as well. Whether in training data or as the source for a direct “put X face on Y body in this video” swap, actual human sex workers and adult performers have had their bodily autonomy used for something they also did not consent to. Whatever your views on sex work and adult performers generally, it can’t be argued that they agreed to have their body of work (no pun intended) used wholesale in ways they did not consent to. They are also victims in these use cases.

Because it’s so heinous, I’ll only mention it briefly, but for underage girls, these types of generative AI “creative” violations are already upending their entire lives. And not because they texted an explicit photo or video to a boy they liked who then shared it with the school, which, while incredibly and unspeakably harmful, at least involved content recorded with their knowledge, regardless of the laws around underage consent, which are well established and need not be addressed here. In most places, there is NO LAW against creating novel, graphic, sexually explicit images of women without their involvement, knowledge, or consent. The laws that do exist are hard to enforce and woefully inadequate for a time when anyone can go to any of the ~40 websites I was able to find on Reddit in less than a minute while researching this piece and put the face of any woman on a generated, fully nude body that approximates or “enhances” the body of the real person. Or put her in psycho-sexualized animated horror scenes. It takes seconds to generate this content. Where does that content end up? Does it matter whether it’s for “personal use” or spammed on 4chan? There’s an obvious spectrum of abuse here, but in either case, there was no knowledge or consent.

For especially lazy offenders, some services and platforms even let users post ‘bounties’ to have someone else do it for them. Too lazy or scared to use this widely available technology yourself? You can find someone to do it for you, and you can find them quickly. Or you can go to any of the internet forums that deal in these sorts of things, which are so numerous that I don’t think anyone could quantify them if they spent the rest of their waking lives trying. 404 Media, a journalist-founded and journalist-owned publication, is doing great work on this subject, and I encourage you to read it.

Once again, it’s important to emphasize that this technology is not new. What’s new is its scale, speed, effectiveness, and ease of use: anyone can use it, with no barrier to entry. I attended the TED AI conference in San Francisco a few weeks ago and listened to some of the world’s brightest minds (philosophers, engineers, and scientists, some of whom have spent decades decrying the coming global shift once we hit the inevitable AI inflection point). They talked about every conceivable outcome for the world, both good and bad. Except this issue. It wasn’t mentioned once.

And yet, the cat is out of the bag, the horse is out of the barn, the genie is out of the bottle, the beans have been spilled, the Rubicon has been crossed, the die is cast, and Pandora’s box is open for business. Insert additional tragic idiom here.

I don’t have a solution, aside from creating a worldwide system in which every image and video is officially logged, subject to verification, and authenticated on a permanent, global, non-fungible ledger, where each piece of content’s metadata determines whether it can be fed into a generative system. That is a great conceptual idea I may write about in the future, but it is basically a pipe dream, and also a scenario in which we find ourselves in 1984, subject to governmental sanction of our biometric digital likeness and creative works. Not ideal.

This is a Public Service Announcement. An FYI for you to share with people you know and care about, because not enough people know what is happening or how exposed they are to this sort of behavior, which is to say very exposed, if they have been online for most of their lives, as most people have at this point.

So what can you do? Make it all private? Well, if you think your private social profile with only a few hundred followers doesn’t include at least ONE man who will try to use your photos to make inappropriate generative content in the future, I’d say you are naive, and you may one day wake up inside your own personal Black Mirror “Joan Is Awful” episode.

Delete it all. Burn it down. Scrub it clean. Blur yourselves into oblivion. Cover yourselves with your favorite emojis. Whatever you do, your biometric personal autonomy is gone, and absent some global legal and technological Hail Mary miracle the likes of which the world has never seen, it’s gone for good.

And please for the love of all things sacred in the eyes of the Flying Spaghetti Monster: Stop putting photos and videos of your kids on the internet.

Welcome to The Trough of Disillusionment.

wtflolwhy :: November 15, 2023